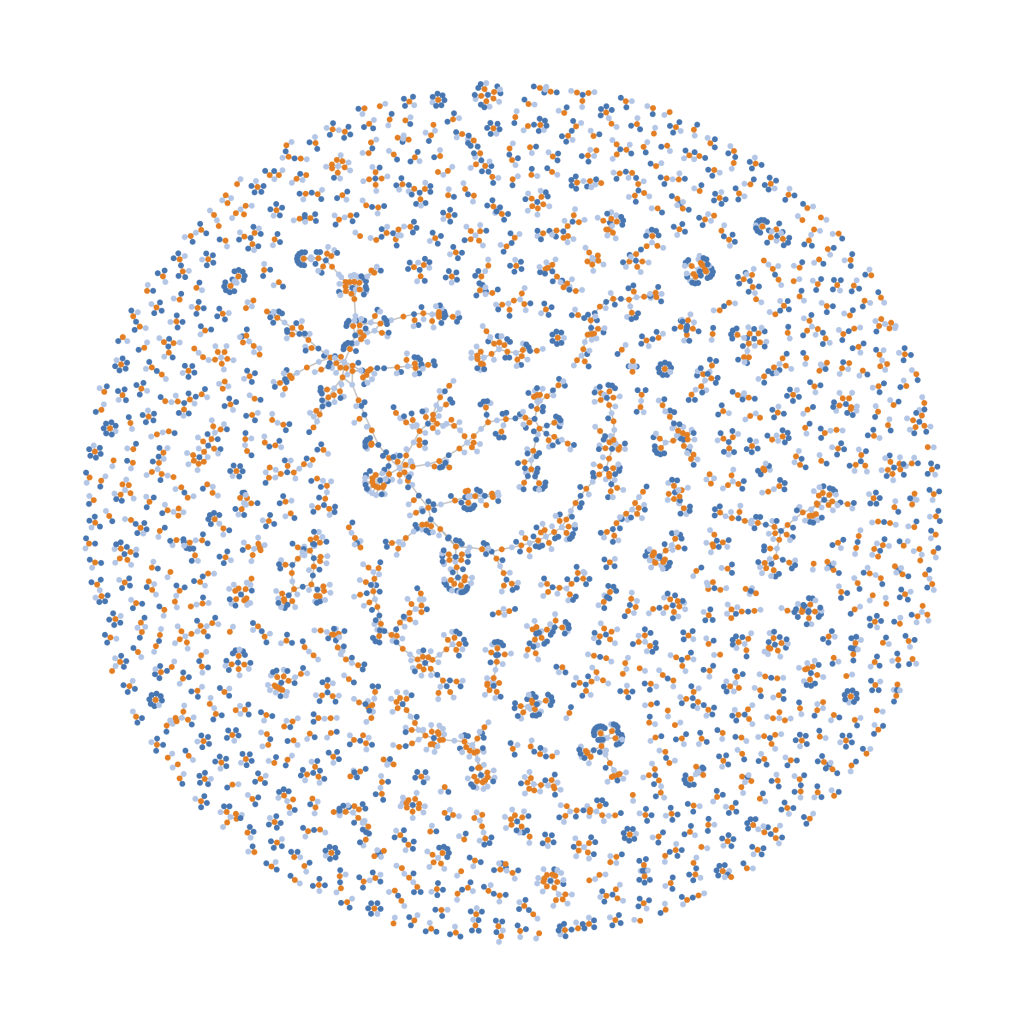

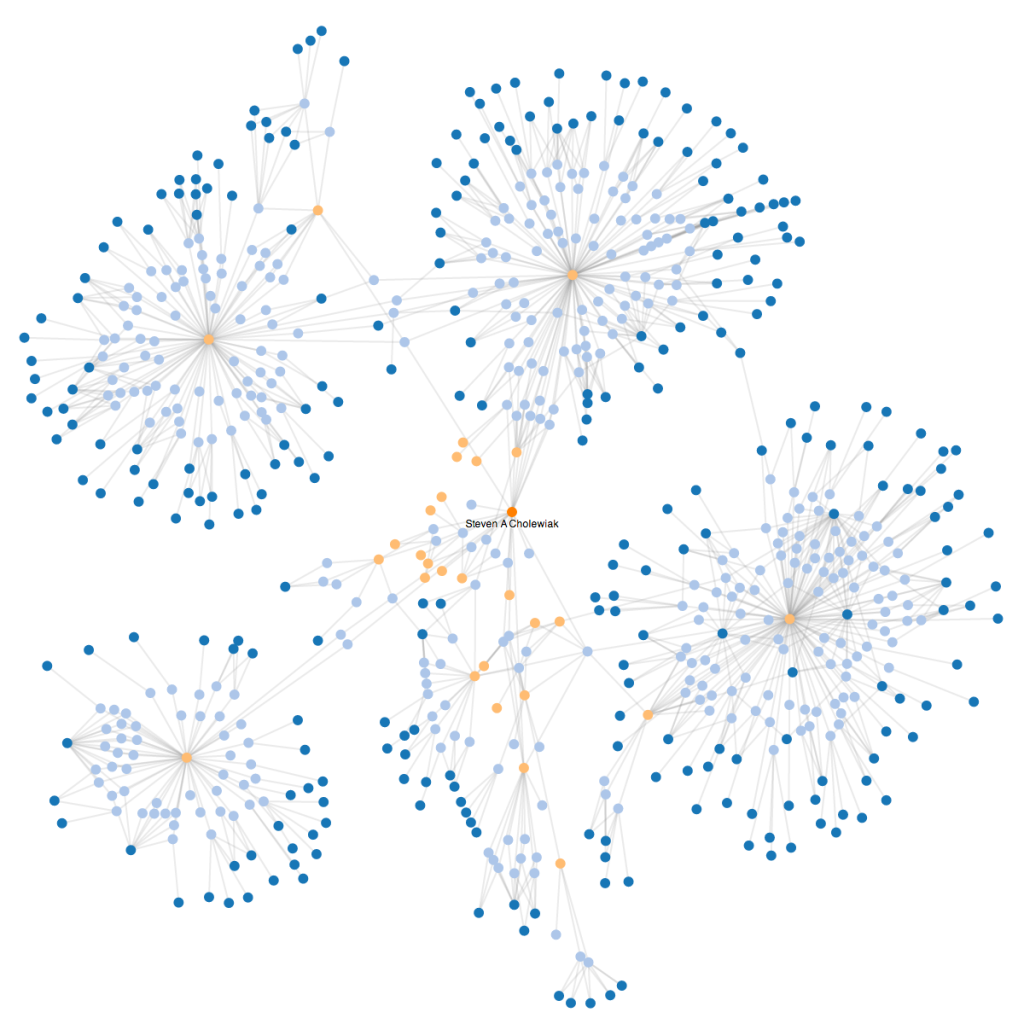

Another post, another neat force-directed graph. This one illustrates the interconnections between professors and students who have been co-authors on some of my papers and presentations, as scrapped from Google Scholar citations. It could be described as the first version of a rough illustration of my 2 degrees of separation in academia.

The dark orange circle in the center is myself, light blue circles are papers/presentations, light orange circles are co-authors, and dark-blue circles are co-authors of my co-authors (i.e., have not necessarily directly worked with me on a project).

Unfortunately, as of today, not all of my co-authors have Google Scholar pages, so there are a number of co-authors whose connections and branches are under-represented. In addition, Google Scholar does not necessarily accumulate all of a given author’s papers/presentations and often makes mistakes misattributing papers to profiles. So, the veracity of the information represented here should be taken with a grain of salt unless I find a better service for generating these networks.

For some more information on how this was created, click-through to the post.